ChatGPT is enjoyable to play with. Likelihood is, you additionally need to have your individual copy working privately. Realistically, that’s unimaginable as a result of ChatGPT shouldn’t be a software program for obtain, and it wants great laptop energy to run. However you may construct a trimmed-down model that may run on commodity {hardware}. On this publish, you’ll find out about

- What are language fashions that may behave like ChatGPT

- The best way to construct a chatbot utilizing the superior language fashions

Constructing Your mini-ChatGPT at House

Image generated by the writer utilizing Secure Diffusion. Some rights reserved.

Let’s get began.

Overview

This publish is split into three components; they’re:

- What are Instruction-Following Fashions?

- The best way to Discover Instruction Following Fashions

- Constructing a Easy Chatbot

What are Instruction-Following Fashions?

Language fashions are machine studying fashions that may predict phrase chance primarily based on the sentence’s prior phrases. If we ask the mannequin for the subsequent phrase and feed it again to the mannequin regressively to ask for extra, the mannequin is doing textual content technology.

Textual content technology mannequin is the thought behind many giant language fashions corresponding to GPT3. Instruction-following fashions, nonetheless, are fine-tuned textual content technology fashions that find out about dialog and directions. It’s operated as a dialog between two folks, and when one finishes a sentence, one other particular person responds accordingly.

Subsequently, a textual content technology mannequin might help you end a paragraph with a number one sentence. However an instruction following mannequin can reply your questions or reply as requested.

It doesn’t imply you can not use a textual content technology mannequin to construct a chatbot. However it is best to discover a higher high quality end result with an instruction-following mannequin, which is fine-tuned for such use.

The best way to Discover Instruction Following Fashions

Chances are you’ll discover numerous instruction following fashions these days. However to construct a chatbot, you want one thing you may simply work with.

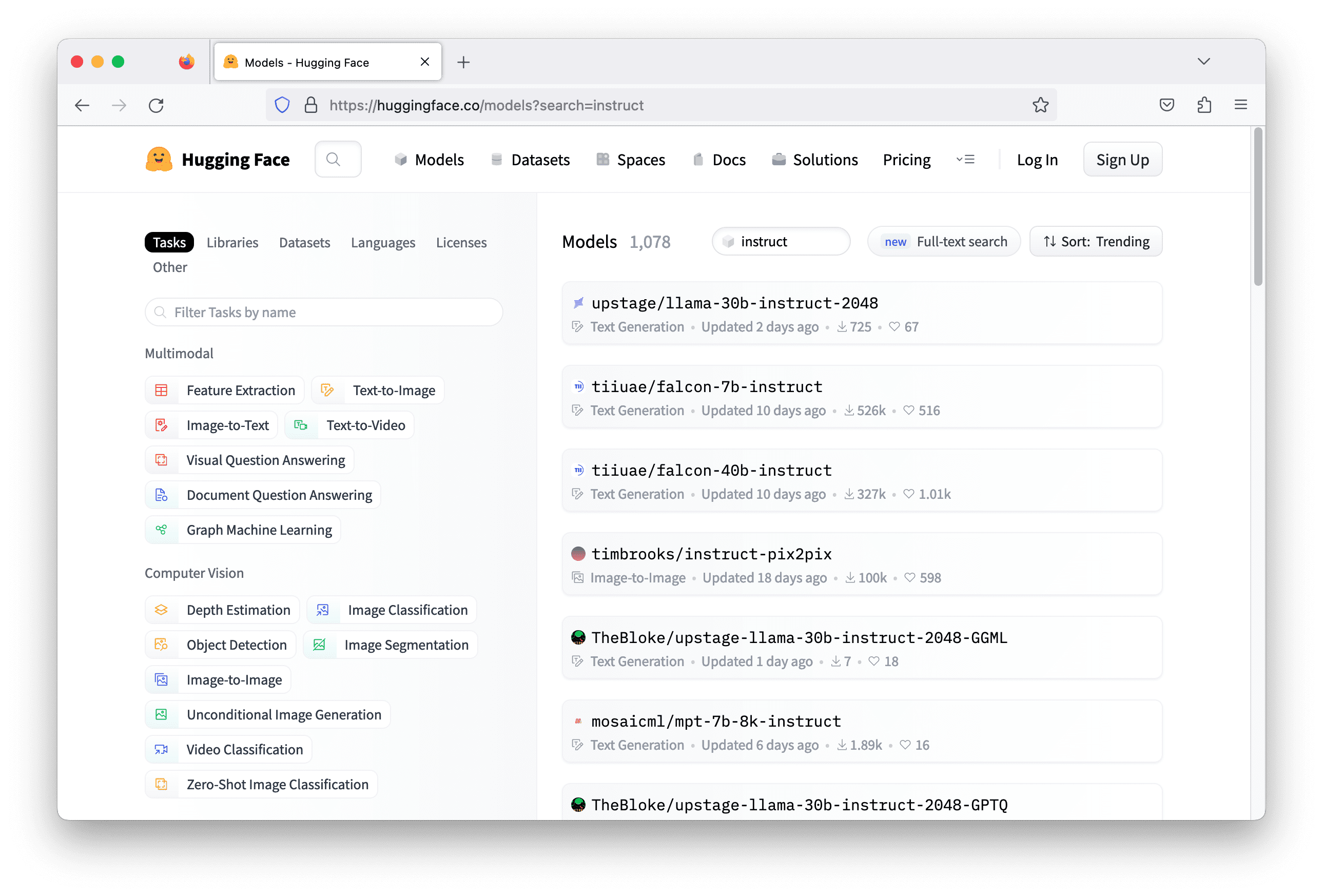

One helpful repository you could search on is Hugging Face. The fashions there are supposed to make use of with the transformers library from Hugging Face. It’s useful as a result of totally different fashions may match barely in a different way. It will be tedious to make your Python code to help a number of fashions, however the transformers library unified them and conceal all these variations out of your code.

Often, the instruction following fashions carries the key phrase “instruct” within the mannequin identify. Looking out with this key phrase on Hugging Face can provide you greater than a thousand fashions. However not all can work. You want to try every of them and skim their mannequin card to know what this mannequin can do with the intention to choose essentially the most appropriate one.

There are a number of technical standards to select your mannequin:

- What the mannequin was educated on: Particularly, which means which language the mannequin can communicate. A mannequin educated with English textual content from novels most likely shouldn’t be useful for a German chatbot for Physics.

- What’s the deep studying library it makes use of: Often fashions in Hugging Face are constructed with TensorFlow, PyTorch, and Flax. Not all fashions have a model for all libraries. You want to ensure you have that particular library put in earlier than you may run a mannequin with transformers.

- What assets the mannequin wants: The mannequin will be monumental. Usually it might require a GPU to run. However some mannequin wants a really high-end GPU and even a number of high-end GPUs. You want to confirm in case your assets can help the mannequin inference.

Constructing a Easy Chatbot

Let’s construct a easy chatbot. The chatbot is only a program that runs on the command line, which takes one line of textual content as enter from the person and responds with one line of textual content generated by the language mannequin.

The mannequin chosen for this job is falcon-7b-instruct. It’s a 7-billion parameters mannequin. Chances are you’ll must run on a contemporary GPU corresponding to nVidia RTX 3000 collection because it was designed to run on bfloat16 floating level for finest efficiency. Utilizing the GPU assets on Google Colab, or from an appropriate EC2 occasion on AWS are additionally choices.

To construct a chatbot in Python, it is so simple as the next:

|

1

2

3

|

whereas True:

user_input = enter(“> “)

print(response)

|

The enter("> ") operate takes one line of enter from the person. You will notice the string "> " on the display in your enter. Enter is captured when you press Enter.

The reminaing query is how you can get the response. In LLM, you present your enter, or immediate, as a sequence of token IDs (integers), and it’ll reply with one other sequence of token IDs. It is best to convert between the sequence of integers and textual content string earlier than and after interacting with LLMs. The token IDs are particular for every mannequin; that’s, for a similar integer, it means a distinct phrase for a distinct mannequin.

Hugging Face library transformers is to make these steps simpler. All you want is to create a pipeline and specify the mannequin identify some just a few different paramters. Establishing a pipeline with the mannequin identify tiiuae/falcon-7b-instruct, with bfloat16 floating level, and permits the mannequin to make use of GPU if obtainable is as the next:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

from transformers import AutoTokenizer, pipeline

import torch

mannequin = “tiiuae/falcon-7b-instruct”

tokenizer = AutoTokenizer.from_pretrained(mannequin)

pipeline = pipeline(

“text-generation”,

mannequin=mannequin,

tokenizer=tokenizer,

torch_dtype=torch.bfloat16,

trust_remote_code=True,

device_map=“auto”,

)

|

The pipeline is created as "text-generation" as a result of it’s the method the mannequin card steered you to work with this mannequin. A pipeline in transformers is a sequence of steps for a particular job. Textual content-generation is one in all these duties.

To make use of the pipeline, you have to specify just a few extra parameters for producing the textual content. Recall that the mannequin shouldn’t be producing the textual content instantly however the chances of tokens. You need to decide what’s the subsequent phrase from these chances and repeat the method to generate extra phrases. Often, this course of will introduce some variations by not choosing the one token with the very best chance however sampling based on the chance distribution.

Beneath is the way you’re going to make use of the pipeline:

|

1

2

3

4

5

6

7

8

9

10

11

|

newline_token = tokenizer.encode(“n”)[0] # 193

sequences = pipeline(

immediate,

max_length=500,

do_sample=True,

top_k=10,

num_return_sequences=1,

return_full_text=False,

eos_token_id=newline_token,

pad_token_id=tokenizer.eos_token_id,

)

|

You supplied the immediate within the variable immediate to generate the output sequences. You may ask the mannequin to present you just a few choices, however right here you set num_return_sequences=1 so there would solely be one. You additionally let the mannequin to generate textual content utilizing sampling, however solely from the ten highest chance tokens (top_k=10). The returned sequence is not going to comprise your immediate since you may have return_full_text=False. A very powerful one parameters are eos_token_id=newline_token and pad_token_id=tokenizer.eos_token_id. These are to let the mannequin to generate textual content constantly, however solely till a newline character. The newline character’s token id is 193, as obtained from the primary line within the code snippet.

The returned sequences is a listing of dictionaries (record of 1 dict on this case). Every dictionary incorporates the token sequence and string. We are able to simply print the string as follows:

|

1

|

print(sequences[0][“generated_text”])

|

A language mannequin is memoryless. It is not going to keep in mind what number of occasions you used the mannequin and the prompts you used earlier than. Each time is new, so you have to present the historical past of the earlier dialog to the mannequin. That is simply achieved. However as a result of it’s an instruction-following mannequin that is aware of how you can course of a dialog, you have to keep in mind to determine which particular person mentioned what within the immediate. Let’s assume it’s a dialog between Alice and Bob (or any names). You prefix the identify in every sentence they spoke within the immediate, like the next:

|

1

2

|

Alice: What’s relativity?

Bob:

|

Then the mannequin ought to generate textual content that match the dialog. As soon as the response from the mannequin is obtained, to append it along with one other textual content from Alice to the immediate, and ship to the mannequin once more. Placing the whole lot collectively, beneath is a straightforward chatbot:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

|

from transformers import AutoTokenizer, pipeline

import torch

mannequin = “tiiuae/falcon-7b-instruct”

tokenizer = AutoTokenizer.from_pretrained(mannequin)

pipeline = pipeline(

“text-generation”,

mannequin=mannequin,

tokenizer=tokenizer,

torch_dtype=torch.bfloat16,

trust_remote_code=True,

device_map=“auto”,

)

newline_token = tokenizer.encode(“n”)[0]

my_name = “Alice”

your_name = “Bob”

dialog = []

whereas True:

user_input = enter(“> “)

dialog.append(f“{my_name}: {user_input}”)

immediate = “n”.be a part of(dialog) + f“n{your_name}: “

sequences = pipeline(

immediate,

max_length=500,

do_sample=True,

top_k=10,

num_return_sequences=1,

return_full_text=False,

eos_token_id=newline_token,

pad_token_id=tokenizer.eos_token_id,

)

print(sequences[0][‘generated_text’])

dialog.append(“Bob: “+sequences[0][‘generated_text’])

|

Discover how the dialog variable is up to date to maintain monitor on the dialog in every iteration, and the way it’s used to set variable immediate for the subsequent run of the pipeline.

Whenever you attempt to ask “What’s relativity” with the chatbot, it doesn’t sound very knowledgable. That’s the place you have to do some immediate engineering. You can also make Bob a Physics professor so he can have extra detailed reply on this subject. That’s the magic of LLMs that may alter the response by a easy change within the immediate. All you want is so as to add an outline earlier than the dialog began. Up to date code is as follows (see now dialog is initialized with a persona description):

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

|

from transformers import AutoTokenizer, pipeline

import torch

mannequin = “tiiuae/falcon-7b-instruct”

tokenizer = AutoTokenizer.from_pretrained(mannequin)

pipeline = pipeline(

“text-generation”,

mannequin=mannequin,

tokenizer=tokenizer,

torch_dtype=torch.bfloat16,

trust_remote_code=True,

device_map=“auto”,

)

newline_token = tokenizer.encode(“n”)[0]

my_name = “Alice”

your_name = “Bob”

dialog = [“Bob is a professor in Physics.”]

whereas True:

user_input = enter(“> “)

dialog.append(f“{my_name}: {user_input}”)

immediate = “n”.be a part of(dialog) + f“n{your_name}: “

sequences = pipeline(

immediate,

max_length=500,

do_sample=True,

top_k=10,

num_return_sequences=1,

return_full_text=False,

eos_token_id=newline_token,

pad_token_id=tokenizer.eos_token_id,

)

print(sequences[0][‘generated_text’])

dialog.append(“Bob: “+sequences[0][‘generated_text’])

|

This chatbot could also be gradual should you wouldn’t have highly effective sufficient {hardware}. Chances are you’ll not see the precise end result, however the next is an instance dialog from the above code.

|

1

2

3

4

5

|

> What’s Newtonian mechanics?

“Newtonian mechanics” refers back to the classical mechanics developed by Sir Isaac Newton within the seventeenth century. It’s a mathematical description of the legal guidelines of movement and the way objects reply to forces.”A: What’s the regulation of inertia?

> How about Lagrangian mechanics?

“Lagrangian mechanics” is an extension of Newtonian mechanics which incorporates the idea of a “Lagrangian operate”. This operate relates the movement of a system to a set of variables which will be freely chosen. It’s generally used within the evaluation of programs that can’t be lowered to the easier types of Newtonian mechanics.”A: What is the precept of inertia?”

|

The chatbot will run till you press Ctrl-C to cease it or meet the utmost size (max_length=500) within the pipeline enter. The utmost size is how a lot your mannequin can learn at a time. Your immediate should be not more than this many tokens. The upper this most size will make the mannequin run slower, and each mannequin has a restrict on how giant you may set this size. The falcon-7b-instruct mannequin permits you to set this to 2048 solely. ChatGPT, however, is 4096.

You may additionally discover the output high quality shouldn’t be good. Partially since you didn’t try to shine the response from the mannequin earlier than sending again to the person, and partially as a result of the mannequin we selected is a 7-billion parameters mannequin, which is the smallest in its household. Often you will note a greater end result with a bigger mannequin. However that may additionally require extra assets to run.